JavaScript Test Code Coverage in Rails

In modern apps, it’s common to enhance the user experience with JavaScript. Whether it’s a few JavaScript sprinkles here and there or a full JS-based frontend, that code matters as much as your Ruby code when it comes to the app working correctly. In this article we’ll show how to measure JavaScript code coverage when running system/integration tests, alongside the Ruby code coverage.

Sample App

We created a sample application with React, TypeScript and vanilla JavaScript code, plus both RSpec and MiniTest examples that you can use for reference. The repository is https://github.com/fastruby/js-coverage-sample-app

Requirements

To measure JavaScript code coverage we will use the Istanbul instrumentation library. That means this guide only works if you use a JavaScript bundler that supports it (Webpacker, for example); it won’t work with Sprockets.

Instrumentation

JS Code

To measure which code is executed, we need to instrument it. For that we will use the Istanbul library, which keeps track of each statement, function and branch that’s executed and stores that information in the window object as window.__coverage__.

We have to add the node package for the Istanbul loader with yarn add istanbul-instrumenter-loader --dev and then configure webpack to use the loader for the desired files:

```javascript
// config/webpack/environment.js
const { environment } = require("@rails/webpacker");

if (process.env.RAILS_ENV === "test") {
  environment.loaders.append("istanbul-instrumenter", {
    test: /(\.js)$|(\.jsx)$|(\.ts)$|(\.tsx)$/,
    use: {
      loader: "istanbul-instrumenter-loader",
      options: { esModules: true },
    },
    enforce: "post",
    exclude: /node_modules/,
  });
}

module.exports = environment;
```

Note that we are adding the configuration in the generic environment.js file instead of the test.js file. In our setup, the test.js file did not seem to be loaded when running the tests.
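To check which files the loader rule above will instrument, here’s a small Ruby sketch that applies the same two patterns the way webpack combines test and exclude: a file must match test and must not match exclude. The instrumented? helper and the sample paths are hypothetical, for illustration only.

```ruby
# The same patterns used in the webpack rule, written as Ruby regular expressions.
TEST_PATTERN = /(\.js)$|(\.jsx)$|(\.ts)$|(\.tsx)$/
EXCLUDE_PATTERN = /node_modules/

# Hypothetical helper: a file is instrumented when it matches `test`
# and does not match `exclude`.
def instrumented?(path)
  TEST_PATTERN.match?(path) && !EXCLUDE_PATTERN.match?(path)
end

[
  "app/javascript/packs/application.js",
  "app/javascript/components/Hello.tsx",
  "app/javascript/data/settings.json",
  "node_modules/react/index.js"
].each { |path| puts "#{path}: #{instrumented?(path)}" }
```

Only the first two paths are instrumented: settings.json doesn’t end in one of the listed extensions, and the React file is skipped because it lives under node_modules.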

If you want to see the window.__coverage__ information in action, you can run the Rails app in the test environment with RAILS_ENV=test NODE_ENV=test rails s and inspect it using the DevTools console.
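Once you’ve looked at window.__coverage__ in the console, it helps to know how to read it. The following minimal Ruby sketch takes that object as a JSON string (for example, the output of JSON.stringify(window.__coverage__)) and computes the percentage of statements hit per file. It assumes Istanbul’s file coverage format, where each file entry has an s hash mapping statement ids to hit counts; the statement_coverage helper and the sample data are hypothetical.

```ruby
require "json"

# Hypothetical helper: returns { "file path" => percentage of statements executed }.
# Assumes Istanbul's format, where each file entry has an "s" hash that maps
# statement ids to the number of times each statement ran.
def statement_coverage(coverage_json)
  JSON.parse(coverage_json).to_h do |path, file_coverage|
    hits = file_coverage.fetch("s").values
    covered = hits.count(&:positive?)
    percent = hits.empty? ? 100.0 : (covered * 100.0 / hits.size).round(1)
    [path, percent]
  end
end

# Hand-written sample; real entries also include statementMap, fnMap, branchMap, f and b.
sample = JSON.generate({
  "app/javascript/packs/hello.js" => {
    "path" => "app/javascript/packs/hello.js",
    "s" => { "0" => 1, "1" => 0, "2" => 3, "3" => 0 }
  }
})

puts statement_coverage(sample)["app/javascript/packs/hello.js"] # prints 50.0
```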

Ruby Code

For the Ruby code coverage, we will use SimpleCov.

First we have to add the gem to our Gemfile with gem 'simplecov', require: false.

Then we update the test environment config with:

```ruby
# config/environments/test.rb
if ENV["RAILS_ENV"] == "test" && ENV["COVERAGE"]
  require "simplecov"
end
```

And we’ll use a .simplecov file with this content:

```ruby
# .simplecov
SimpleCov.start "rails"
```

We start SimpleCov from a separate .simplecov file (requiring simplecov automatically loads that file from the project root) because we’ll add more configuration to it later.

JS Coverage Data After Each Test

When Rails runs our system tests, it opens a browser window and performs the actions that we coded using Capybara. During each example, Istanbul keeps track of the executed code in the window.__coverage__ object. That information only lives in the browser for the duration of the test, so we need to extract it at the end of each example.

To store the results, we run a block of code after each system test that reads the value from the current page’s window object and saves it in a special .nyc_output directory.

First we have to update our test environment config with this at the top of the config/environments/test.rb file:

```ruby
# config/environments/test.rb

# if running tests and we want the code coverage, include `simplecov` and prepare the directories
if ENV["RAILS_ENV"] == "test" && ENV["COVERAGE"]
  require "simplecov"
  FileUtils.mkdir_p(".nyc_output") # make sure the directory exists
  Dir.glob("./.nyc_output/*").each { |f| FileUtils.rm(f) } # clear results from previous runs
end

# this function should be executed when we want to store the current `window.__coverage__` info in a file
def dump_js_coverage
  return unless ENV["COVERAGE"]

  page_coverage = page.evaluate_script("JSON.stringify(window.__coverage__);")
  return if page_coverage.blank?

  # we will store one `js-....json` file for each system test, and we save all of them in the .nyc_output dir
  File.open(Rails.root.join(".nyc_output", "js-#{Random.rand(10000000000)}.json"), "w") do |report|
    report.puts page_coverage
  end
end
```
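Outside of Capybara and Rails, the dump step boils down to: take the JSON string, skip it if it’s empty, and write it to a uniquely named file. The standalone sketch below shows that logic. write_js_coverage is a hypothetical name, its first argument stands in for the value returned by page.evaluate_script, and it swaps the random integer for SecureRandom.uuid so file names are practically guaranteed not to collide.

```ruby
require "fileutils"
require "securerandom"
require "tmpdir"

# Hypothetical standalone version of dump_js_coverage. `coverage_json` stands in for
# the result of page.evaluate_script("JSON.stringify(window.__coverage__);"),
# which can be nil when the page has no instrumented code.
def write_js_coverage(coverage_json, output_dir)
  return nil if coverage_json.nil? || coverage_json.strip.empty?

  FileUtils.mkdir_p(output_dir) # make sure the directory exists
  path = File.join(output_dir, "js-#{SecureRandom.uuid}.json")
  File.write(path, coverage_json)
  path # return the file name so callers can log it if they want
end

Dir.mktmpdir do |dir|
  write_js_coverage('{"app.js":{"s":{"0":1}}}', dir)
  write_js_coverage(nil, dir) # skipped: nothing to store
  puts Dir.glob(File.join(dir, "js-*.json")).size # prints 1
end
```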

Then we have to attach a hook to execute that function after each system test ends. Depending on the test runner you use, this is done in different places.

For MiniTest:

```ruby
# test/application_system_test_case.rb
require "test_helper"

class ApplicationSystemTestCase < ActionDispatch::SystemTestCase
  driven_by :selenium, using: :chrome, screen_size: [1400, 1400]

  def before_teardown
    dump_js_coverage
    super # keep the teardown hooks defined by Minitest and Rails (e.g. failure screenshots)
  end
end
```

If you use other types of tests with JavaScript (like Feature tests or Integration tests) you may want to add the before_teardown hook to those types too.

For RSpec:

```ruby
# spec/rails_helper.rb
RSpec.shared_context "dump JS coverage" do
  after { dump_js_coverage }
end

RSpec.configure do |config|
  config.include_context "dump JS coverage", type: :system
  # ...
end
```

Note that this is a workaround for an issue we found. Ideally, you would use a config.after(:each, type: :system) do block here, but that after hook is triggered too late, so we run dump_js_coverage from a shared context instead.

Also note that we collect the coverage at the end of each example by reading the window.__coverage__ object. If, during a single example, you call visit again or perform an action that clears that object, the information will be incomplete at the end of the example. (If you use something like Turbo or Turbolinks, it should be fine, since they replace standard HTTP requests with Ajax requests and keep the __coverage__ object between page changes.)

If your test changes pages and you lose coverage data, you may need a workaround, like manually calling dump_js_coverage before any call to the visit method, or adding a wrapper around calls to the click or click_link methods and actions that trigger form submissions. There are too many edge cases to cover them all in this article.
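One way to implement the visit workaround is to prepend a module that dumps the current coverage before navigating. Below is a minimal, self-contained sketch: DumpCoverageBeforeVisit is a hypothetical module name, and FakeSystemTest is a stand-in for a real system test class, used only to show the call order. In a real suite, visit would come from Capybara and dump_js_coverage would be the helper from config/environments/test.rb.

```ruby
# Hypothetical module: dump the coverage collected so far before `visit`
# navigates to a new page and the browser discards window.__coverage__.
module DumpCoverageBeforeVisit
  def visit(*args)
    dump_js_coverage
    super
  end
end

# Stand-in for a system test class, used here only to show the call order.
class FakeSystemTest
  attr_reader :calls

  def initialize
    @calls = []
  end

  def visit(path)
    @calls << "visit #{path}"
  end

  def dump_js_coverage
    @calls << "dump"
  end

  prepend DumpCoverageBeforeVisit
end

example = FakeSystemTest.new
example.visit("/posts")
example.visit("/posts/new")
p example.calls # prints ["dump", "visit /posts", "dump", "visit /posts/new"]
```

In an actual suite you might prepend the module into ApplicationSystemTestCase. The extra dump before the first visit is harmless, since dump_js_coverage returns early when there is no coverage data yet, and the end-of-example dump still captures the last page.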

JS Coverage Data Merge After All Tests

After we finish running all the tests, we have to merge the per-test results into one JS report file. To manage the reports we will use the nyc command from Istanbul.

First we have to install it using yarn add nyc --dev.

Then, we add a .nycrc configuration file with this content:

```json
{
  "report-dir": "js-coverage"
}
```

This file will tell nyc where to put the generated report.

Now, we add a coverage script to the package.json file:

```json
"scripts": {
  "coverage": "nyc merge .nyc_output .nyc_output/out.json && nyc report --reporter=html"
}
```

This script does two things:

- it merges all the files under .nyc_output into a single out.json file
- it then generates an HTML report using that generated file

With this script in place, we can run yarn coverage to generate an HTML report in the js-coverage folder.
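Conceptually, merging adds up the hit counters of the per-test files. The sketch below is a simplified Ruby illustration of that idea, not nyc’s actual implementation. It assumes every per-test file was produced by the same build, so a given source file has identical statement, function and branch maps in each report, and it only sums the s, f and b counters; merge_coverage and the sample data are hypothetical.

```ruby
require "json"

# Simplified illustration of merging Istanbul coverage objects; this is not nyc's code.
# Assumes every report was produced by the same build, so a given source file has
# identical statementMap/fnMap/branchMap entries in each report.
def merge_coverage(reports)
  reports.each_with_object({}) do |report, merged|
    report.each do |path, file|
      unless merged.key?(path)
        merged[path] = JSON.parse(JSON.generate(file)) # deep copy of the first occurrence
        next
      end

      target = merged[path]
      # "s" (statements) and "f" (functions): add up the hit counts for each id.
      %w[s f].each do |key|
        target[key] ||= {}
        file.fetch(key, {}).each { |id, hits| target[key][id] = target[key].fetch(id, 0) + hits }
      end
      # "b" (branches): each id maps to an array with one counter per branch path.
      target["b"] ||= {}
      file.fetch("b", {}).each do |id, hits|
        existing = target["b"].fetch(id, Array.new(hits.size, 0))
        target["b"][id] = existing.zip(hits).map { |x, y| x.to_i + y.to_i }
      end
    end
  end
end

test_a = { "app.js" => { "s" => { "0" => 1, "1" => 0 }, "f" => { "0" => 1 }, "b" => { "0" => [1, 0] } } }
test_b = { "app.js" => { "s" => { "0" => 2, "1" => 1 }, "f" => { "0" => 0 }, "b" => { "0" => [0, 3] } } }

merged = merge_coverage([test_a, test_b])
p merged["app.js"]["s"].values # prints [3, 1]
p merged["app.js"]["b"]["0"]   # prints [1, 3]
```

To try it on real data, you could load the per-test files with Dir.glob(".nyc_output/js-*.json").map { |f| JSON.parse(File.read(f)) } and pass the array to merge_coverage. For actual reports, stick with nyc, which handles the full coverage format.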

Finally, we have to run this script at the end of the test suite. To do so, we will use SimpleCov’s at_exit block to run the yarn coverage script. We can update the .simplecov file with this content:

```ruby
# .simplecov
SimpleCov.at_exit do
  # process simplecov ruby report
  # this is the default if no `at_exit` block is configured
  SimpleCov.result.format!

  # process istanbul js report
  system("yarn coverage")
end

SimpleCov.start "rails"
```
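Keep in mind that Ruby’s system returns true on success, false when the command exits with a non-zero status, and nil when it can’t be started at all, so a failing yarn coverage would go unnoticed in the block above. A small helper like the one below could be called from the at_exit block instead of calling system directly; run_report_command is a hypothetical name and not part of SimpleCov.

```ruby
require "rbconfig"

# Hypothetical helper for the SimpleCov.at_exit block: runs a report command
# and prints a warning instead of failing silently when it doesn't succeed.
def run_report_command(*command)
  ok = system(*command)
  unless ok
    reason = ok.nil? ? "could not be started" : "exited with an error"
    warn "JS coverage report #{reason}: #{command.join(' ')}"
  end
  ok
end

# In .simplecov this would be: run_report_command("yarn", "coverage")
# Here we use the current Ruby binary so the example runs anywhere.
run_report_command(RbConfig.ruby, "-e", "exit 0") # returns true
run_report_command(RbConfig.ruby, "-e", "exit 1") # returns false and prints a warning
```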

The Reports

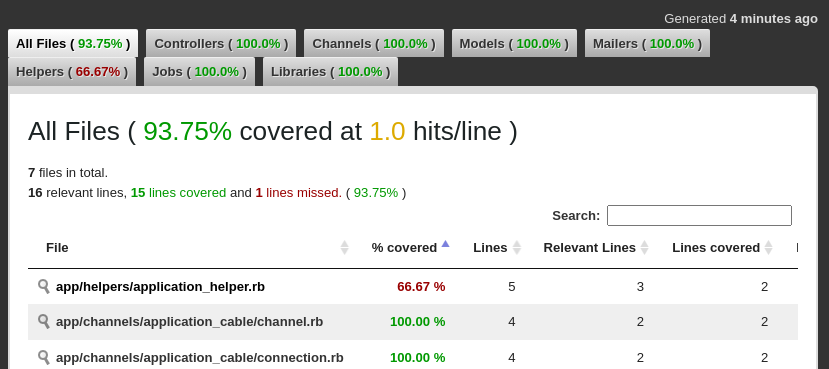

After all tests are executed, it generates both the Ruby coverage (stored in the coverage folder):

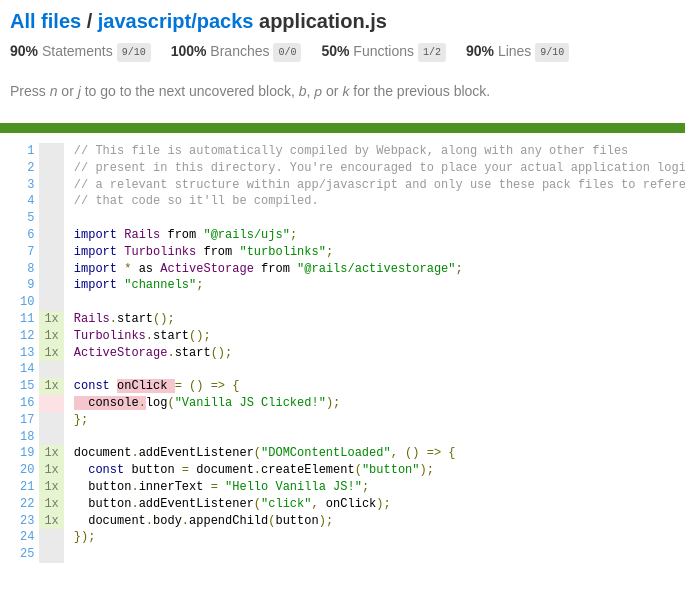

And the JavaScript coverage (stored in the js-coverage folder):

Troubleshooting

Simplecov and Parallelization

SimpleCov has known issues calculating code coverage when tests are run in parallel, which new Rails apps enable by default for MiniTest: https://github.com/simplecov-ruby/simplecov/issues/718.

A workaround is to disable parallelization if we want to get the Ruby test coverage:

```ruby
# test/test_helper.rb
ENV["RAILS_ENV"] ||= "test"
require_relative "../config/environment"
require "rails/test_help"

class ActiveSupport::TestCase
  # Run tests in parallel with specified workers (a single worker when collecting coverage)
  workers = ENV["COVERAGE"] ? 1 : :number_of_processors
  parallelize(workers: workers)

  # Setup all fixtures in test/fixtures/*.yml for all tests in alphabetical order.
  fixtures :all

  # Add more helper methods to be used by all tests here...
end
```

Last Tip

It’s a good idea to ignore some directories in your version control so you don’t push your coverage reports. Add these lines to your .gitignore file:

```
.nyc_output
coverage
js-coverage
```

Conclusion

Code coverage is not a direct indicator of a good test suite, but it is a useful metric for showing which parts of your code are not exercised by any test, and therefore which features are not being tested. With JavaScript code coverage we can find code that users interact with directly but that our tests never run. That way we can improve our tests to get a more robust codebase, and be more confident when we need to refactor the code or add new features.